Geometric Computing Laboratory

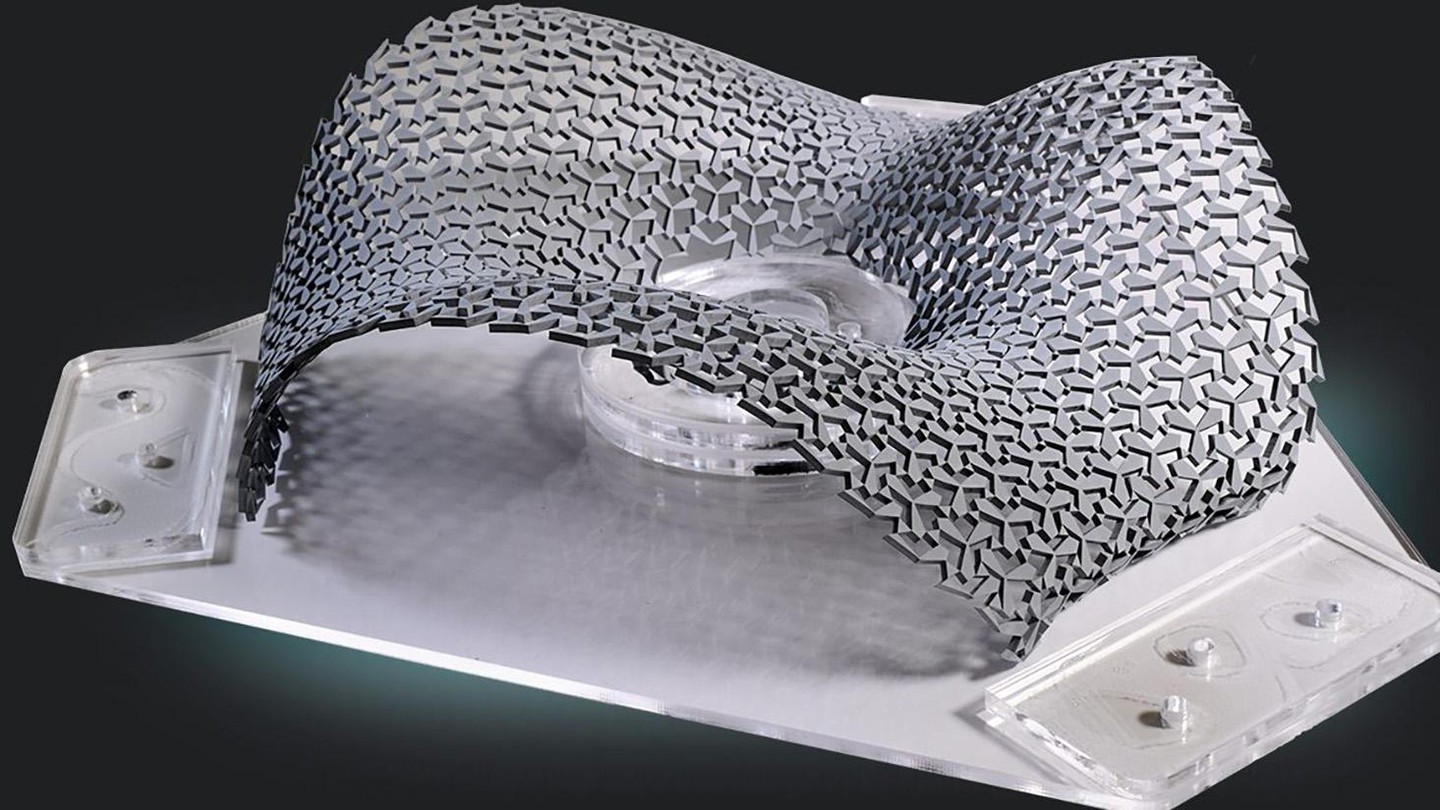

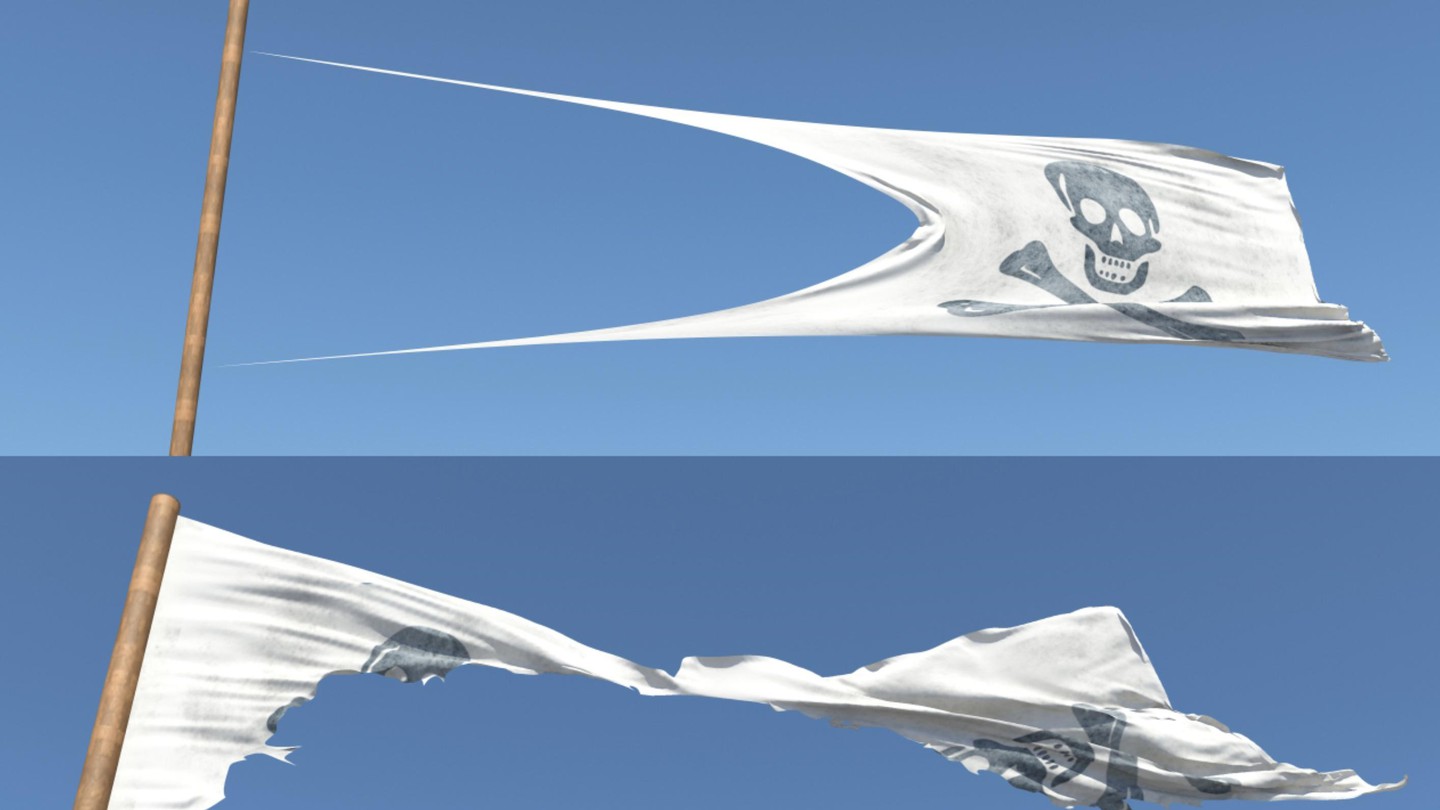

Our research aims at empowering creators. We develop efficient simulation and optimization algorithms to build computational design methodologies for advanced material systems and digital fabrication technologies.

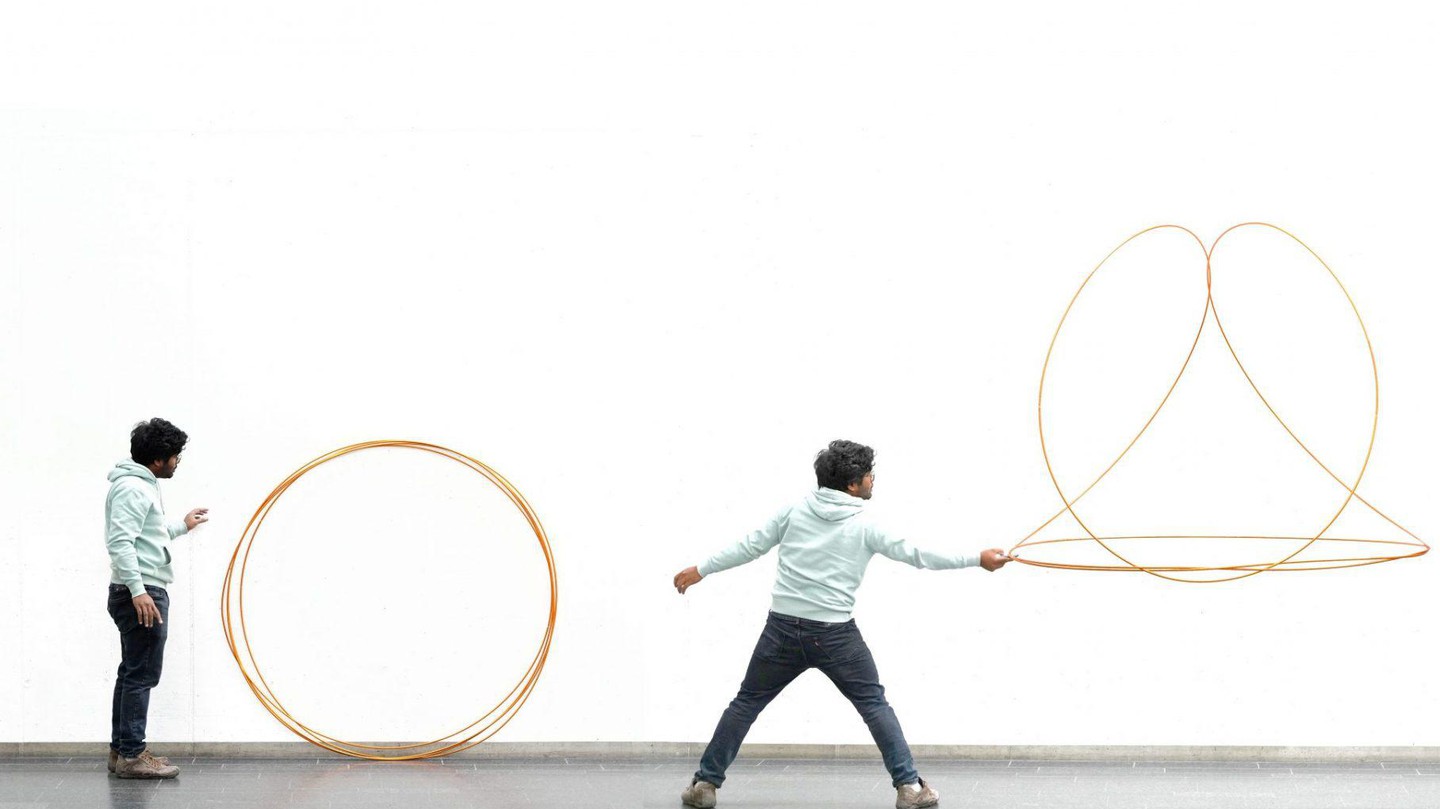

Mathematical reasoning, geometric abstractions, and powerful numerical methods are key ingredients in our work. We pursue a holistic approach in that we design and fabricate functional physical prototypes, collaborate with artists and designers, and engage in industry collaborations to validate and inspire our research.